Enumerating .gov.af

by kpcyrd

Due to recent political events, there’s increased interest in Afghanistan’s websites. This is a tutorial on how to run sn0int on .gov.af to enumerate as many sites as possible for archival purposes.

Installation

sn0int can be installed with pacman -S sn0int or brew install sn0int.

Enumerating an eTLD

We’re going to start sn0int in a new workspace that we call gov-af. This can be any name, it’s just a way to organize our data.

We then create a gov.af domain object in sn0int so we can run investigations on it. This is technically not how the domain object is supposed to be used: gov.af is an eTLD (effective top-level domain) and is listed on the Public Suffix List. sn0int domain objects are meant to be registrable domains, which usually means exactly one label below an eTLD, like example.com, example.org or example.co.uk.

We can still create a domain object for an eTLD manually, and some modules may be able to work with it.
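To make the distinction concrete, here is a minimal Python sketch of how a registrable domain is derived: find the longest matching public suffix, then keep one more label. The `PUBLIC_SUFFIXES` set is a hypothetical, tiny stand-in for the real Public Suffix List, and the sketch ignores the list’s wildcard and exception rules.

```python
from __future__ import annotations

# Hypothetical toy subset of the Public Suffix List, for illustration only.
# The real list has thousands of entries plus wildcard/exception rules,
# which this sketch does not implement.
PUBLIC_SUFFIXES = {"af", "gov.af", "com.af", "com", "org", "uk", "co.uk"}


def public_suffix(hostname: str) -> str | None:
    """Return the longest suffix of `hostname` found in PUBLIC_SUFFIXES."""
    labels = hostname.lower().rstrip(".").split(".")
    # Try the longest candidate first: "a.b.c", then "b.c", then "c".
    for i in range(len(labels)):
        candidate = ".".join(labels[i:])
        if candidate in PUBLIC_SUFFIXES:
            return candidate
    return None


def registrable_domain(hostname: str) -> str | None:
    """Public suffix plus one more label, or None if there is no such label."""
    suffix = public_suffix(hostname)
    if suffix is None:
        return None
    labels = hostname.lower().rstrip(".").split(".")
    suffix_len = suffix.count(".") + 1
    if len(labels) <= suffix_len:
        # The hostname *is* a public suffix, e.g. "gov.af".
        return None
    return ".".join(labels[-(suffix_len + 1):])
```

With this table, `registrable_domain("www.example.gov.af")` yields `example.gov.af` (a hypothetical name), while `registrable_domain("gov.af")` yields `None`. That is exactly why gov.af on its own doesn’t fit sn0int’s domain model.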

The relevant commands in the video are:

```
sn0int -w gov-af
add domain
select domains
```

Certificate Transparency logs are public, append-only logs of issued TLS certificates, kept so that misissued certificates can be detected. We can discover domains and subdomains from the certificates recorded there. Instead of downloading a full copy of the logs, we use the API of crt.sh, a service that indexes this data. If the module isn’t installed in sn0int yet, we need to install it first; afterwards we can run it on the target domain. This may take some time.

The relevant commands in the video are:

```
pkg install kpcyrd/ctlogs
use ctlogs
target
run
```

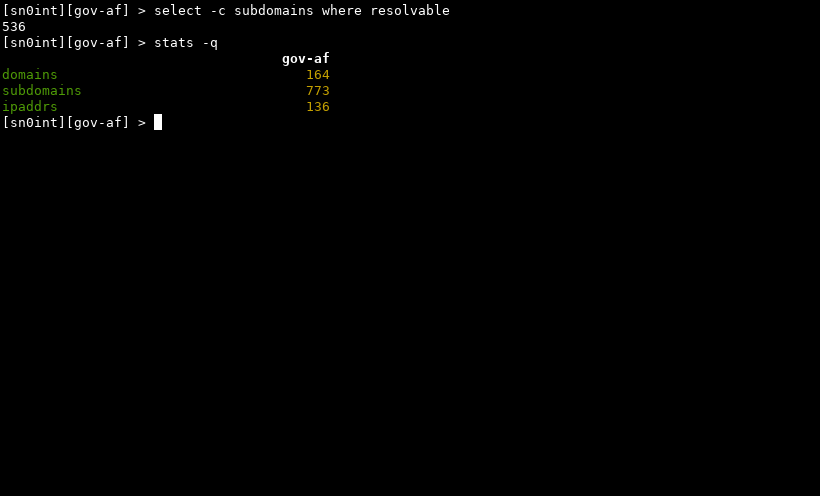

By default, all known entities are targeted; you can double-check them with the target command. The discovered domains and subdomains are automatically recorded in sn0int for further processing. The stats of this workspace should look similar to this:

By searching through transparency logs we’ve discovered 164 domains and 773 subdomains. The transparency log we’re working with is append-only and unaffected by recent events.
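The ctlogs module does all of this for you. The following Python sketch only illustrates the idea and is not the module’s code. It assumes that crt.sh’s JSON output (`output=json`) is a list of objects whose `name_value` field holds one or more newline-separated hostnames, and that `%` works as a wildcard in the query. `fetch_crtsh` and `names_from_crtsh` are hypothetical helper names.

```python
from __future__ import annotations

import json
import urllib.parse
import urllib.request


def fetch_crtsh(query: str) -> str:
    """Download crt.sh results for `query` as raw JSON text (network call)."""
    # urlencode turns "%" into "%25", as crt.sh expects in the URL.
    url = "https://crt.sh/?" + urllib.parse.urlencode({"q": query, "output": "json"})
    with urllib.request.urlopen(url, timeout=120) as resp:
        return resp.read().decode("utf-8")


def names_from_crtsh(raw_json: str, suffix: str) -> set[str]:
    """Extract unique hostnames at or below `suffix` from a crt.sh JSON response."""
    names = set()
    for entry in json.loads(raw_json):
        # One certificate can cover several names, separated by newlines.
        for name in entry.get("name_value", "").splitlines():
            name = name.strip().lower()
            if name.startswith("*."):
                name = name[2:]  # drop the wildcard label
            if name == suffix or name.endswith("." + suffix):
                names.add(name)
    return names
```

Usage would look like `names_from_crtsh(fetch_crtsh("%.gov.af"), "gov.af")`. Keeping the parsing separate from the download makes it easy to test against a saved response.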

Mass resolving all domains

This data potentially contains a lot of historical records too, but we’re only interested in websites that are currently online.

The most practical way to determine which of these records are still in use is to try resolving all of them. We use run -j 12 so the UI fits the recording format; a reasonable concurrency for real-world use would be -j 32. This run takes a few minutes, and there will likely be some errors at the end (that’s ok).

The relevant commands in the video are:

```
pkg install kpcyrd/dns-resolve
use dns-resolve
run -j 12
```
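Conceptually, mass resolving is just many independent lookups run concurrently, with `-j` controlling how many run at once. Below is a rough Python equivalent using a thread pool, not how kpcyrd/dns-resolve is implemented. `system_resolve` uses the operating system’s resolver via `socket.getaddrinfo`; the `resolver` parameter is a hypothetical hook so the logic can be exercised without network access.

```python
from __future__ import annotations

import socket
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Iterable


def system_resolve(name: str) -> list[str]:
    """Look up IPv4/IPv6 addresses via the OS resolver (raises socket.gaierror on failure)."""
    infos = socket.getaddrinfo(name, None)
    # Each entry is (family, type, proto, canonname, sockaddr); sockaddr[0] is the IP.
    return sorted({info[4][0] for info in infos})


def resolve_all(
    names: Iterable[str],
    resolver: Callable[[str], list[str]] = system_resolve,
    jobs: int = 32,
) -> dict[str, list[str] | None]:
    """Resolve names with `jobs` concurrent workers; None marks an unresolvable name."""

    def try_resolve(name: str) -> tuple[str, list[str] | None]:
        try:
            return name, resolver(name)
        except OSError:  # socket.gaierror is a subclass of OSError
            return name, None

    with ThreadPoolExecutor(max_workers=jobs) as pool:
        return dict(pool.map(try_resolve, names))
```

Failed lookups are recorded as `None` instead of aborting the run, which matches the idea that a few errors at the end are expected and harmless.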

Now we can filter and count by resolution status:

We’ve collected 536 resolvable subdomains, neat! We also learned about some IP addresses that are hosting the .gov.af websites we found.
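If you export the data and want to reproduce such a count outside of sn0int, a small Python sketch can tally records by their resolvable field. It assumes that `sn0int -w gov-af select --json subdomains` prints one JSON object per line and that each object carries a `resolvable` key that is true, false or null. Both are assumptions, inferred from the `select --json` and `where resolvable` usage elsewhere in this post.

```python
from __future__ import annotations

import json
from collections import Counter
from typing import Iterable


def count_by_resolvable(lines: Iterable[str]) -> Counter:
    """Count JSON-lines records by their `resolvable` field (true/false/null)."""
    counts = Counter()
    for line in lines:
        line = line.strip()
        if not line:
            continue  # skip blank lines
        value = json.loads(line).get("resolvable")
        # JSON true/false/null become readable labels; anything else is "unknown".
        label = {True: "resolvable", False: "unresolvable"}.get(value, "unknown")
        counts[label] += 1
    return counts
```

Called as `count_by_resolvable(sys.stdin)` in a script that the JSON output is piped into, it prints something like `Counter({'resolvable': ..., 'unresolvable': ..., 'unknown': ...})`.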

Note: These numbers are from Friday, 2021-08-13. At the time of editing, they had changed to 420 resolvable subdomains and 127 IP addresses.

Mapping public network surface

If you place a recent copy of GeoLite2-ASN.mmdb in ~/.cache/sn0int/, we can use kpcyrd/asn to do an ASN lookup for each IP address. ASN is short for Autonomous System Number. The number identifies an “administrative entity or domain” that has one or more IP routing prefixes assigned to it. These are often large internet companies, but individuals and governments also participate in this system. All lookups are done offline against the database we copied to ~/.cache/sn0int/.

The relevant commands in the video are:

```
pkg install kpcyrd/asn
use asn
run -j 4
```
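An offline ASN lookup boils down to a longest-prefix match: find the most specific prefix in the database that contains the address and report the AS it belongs to. The MaxMind DB format stores its prefixes in a binary search tree for this. The linear scan below is a simplified Python sketch of the same idea. The `ASN_TABLE` entries are hypothetical and use documentation prefixes (RFC 5737) and documentation ASNs (RFC 5398), not real data.

```python
from __future__ import annotations

import ipaddress

# Hypothetical toy table: (prefix, ASN, organisation).
# A real GeoLite2-ASN.mmdb contains actual prefixes and organisation names.
ASN_TABLE = [
    (ipaddress.ip_network("192.0.2.0/24"), 64496, "EXAMPLE-NET-A"),
    (ipaddress.ip_network("192.0.2.128/25"), 64497, "EXAMPLE-NET-B"),
    (ipaddress.ip_network("198.51.100.0/24"), 64498, "EXAMPLE-NET-C"),
]


def lookup_asn(ip: str) -> tuple[int, str] | None:
    """Return (asn, org) of the most specific prefix containing `ip`, or None."""
    addr = ipaddress.ip_address(ip)
    best = None
    for net, asn, org in ASN_TABLE:
        if addr.version == net.version and addr in net:
            # Prefer the longer (more specific) prefix.
            if best is None or net.prefixlen > best[0].prefixlen:
                best = (net, asn, org)
    return None if best is None else (best[1], best[2])
```

Here `192.0.2.200` falls inside both the /24 and the /25, and the more specific /25 wins.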

With that data we’re now able to generate some stats about the infrastructure:

2021-08-13

```
% sn0int -w gov-af select --json ipaddrs where asn | jq -r .as_org | sort | uniq -c
      1 1&1 Ionos Se
      1 A2HOSTING
      1 Afghan Cyber ISP
     22 AFGHANTELECOM GOVERNMENT COMMUNICATION NETWORK
     14 AMAZON-02
      1 AS-26496-GO-DADDY-COM-LLC
      1 Asia Bridge Telecom
      1 Awareness Software Limited
      2 BIZLAND-SD
      8 CLOUDFLARENET
      1 CONFLUENCE-NETWORK-INC
      2 Contabo GmbH
      2 DIGITALOCEAN-ASN
      2 DIMENOC
      1 GOOGLE
      1 Hetzner Online GmbH
      1 Horizonsat FZ LLC
      1 Host Europe GmbH
      2 Hostinger International Limited
      1 HVC-AS
      2 Linode, LLC
     12 MICROSOFT-CORP-MSN-AS-BLOCK
     12 Ministry of Communication & IT
      1 NAMECHEAP-NET
      1 NCREN
      2 Neda Telecommunications
      1 OIS1
      8 OVH SAS
      1 PhoenixNAP
      2 PUBLIC-DOMAIN-REGISTRY
      1 SINGLEHOP-LLC
      1 SOFTLAYER
     16 UNIFIEDLAYER-AS-1
```

The data can be found at https://web.archive.org/web/20210813133313/https://paste.debian.net/plainh/16af4b2b.
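If jq isn’t available, the same tally can be sketched in Python. This assumes the `select --json ipaddrs` output is one JSON object per line with an `as_org` key (the key name comes from the jq filter above). The helper names are hypothetical.

```python
from __future__ import annotations

import json
from collections import Counter
from typing import Iterable


def count_as_orgs(lines: Iterable[str]) -> Counter:
    """Python counterpart of `jq -r .as_org | sort | uniq -c` over JSON lines."""
    counts = Counter()
    for line in lines:
        line = line.strip()
        if not line:
            continue
        org = json.loads(line).get("as_org")
        # `jq -r` prints a missing or null value as the string "null".
        counts["null" if org is None else org] += 1
    return counts


def format_like_uniq_c(counts: Counter) -> str:
    """Render counts like `uniq -c`: right-aligned count, then the value."""
    # casefold() approximates the case-insensitive order of a locale-aware `sort`.
    return "\n".join(f"{counts[org]:7d} {org}" for org in sorted(counts, key=str.casefold))
```

A script calling `print(format_like_uniq_c(count_as_orgs(sys.stdin)))` can sit at the end of the same pipeline in place of `jq | sort | uniq -c`. The line order may differ slightly from your system’s `sort`, since locales disagree on punctuation and case.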

2021-08-17

```
% sn0int -w gov-af select --json ipaddrs where asn | jq -r .as_org | sort | uniq -c
      1 1&1 Ionos Se
      1 A2HOSTING
     14 AFGHANTELECOM GOVERNMENT COMMUNICATION NETWORK
     13 AMAZON-02
      1 AS-26496-GO-DADDY-COM-LLC
      1 Awareness Software Limited
      2 BIZLAND-SD
      8 CLOUDFLARENET
      1 CONFLUENCE-NETWORK-INC
      2 Contabo GmbH
      2 DIGITALOCEAN-ASN
      2 DIMENOC
      1 GOOGLE
      1 Hetzner Online GmbH
      1 Host Europe GmbH
      2 Hostinger International Limited
      1 HVC-AS
      2 Linode, LLC
     26 MICROSOFT-CORP-MSN-AS-BLOCK
      2 Ministry of Communication & IT
      1 NAMECHEAP-NET
      1 NCREN
      2 Neda Telecommunications
      1 OIS1
      8 OVH SAS
      1 PhoenixNAP
      2 PUBLIC-DOMAIN-REGISTRY
      1 SINGLEHOP-LLC
      1 SOFTLAYER
     16 UNIFIEDLAYER-AS-1
```

The data can be found at https://web.archive.org/web/20210816230055/https://paste.debian.net/plainh/48ffd62e.
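Comparing the two snapshots by eye is error-prone. A small Python sketch can compute the per-organisation change instead. `diff_counts` is a hypothetical helper; the rows below are copied from the 2021-08-13 and 2021-08-17 listings (only a subset, for brevity).

```python
from __future__ import annotations

from collections import Counter


def diff_counts(before: Counter, after: Counter) -> dict[str, int]:
    """Per-organisation change in IP count between two snapshots (non-zero only)."""
    orgs = set(before) | set(after)
    # A Counter returns 0 for missing keys, so orgs that vanished show up as negative.
    changes = {org: after[org] - before[org] for org in orgs}
    return {org: delta for org, delta in sorted(changes.items()) if delta != 0}


# Subset of the 2021-08-13 listing.
before = Counter({
    "AFGHANTELECOM GOVERNMENT COMMUNICATION NETWORK": 22,
    "AMAZON-02": 14,
    "Afghan Cyber ISP": 1,
    "MICROSOFT-CORP-MSN-AS-BLOCK": 12,
    "Ministry of Communication & IT": 12,
    "OVH SAS": 8,
})
# Same organisations in the 2021-08-17 listing.
after = Counter({
    "AFGHANTELECOM GOVERNMENT COMMUNICATION NETWORK": 14,
    "AMAZON-02": 13,
    "MICROSOFT-CORP-MSN-AS-BLOCK": 26,
    "Ministry of Communication & IT": 2,
    "OVH SAS": 8,
})
```

On these rows, the AFGHANTELECOM and Ministry of Communication & IT counts drop, the Microsoft count more than doubles, and OVH is unchanged, so it is filtered out.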

Further steps

So far we’ve barely interacted with the target network: we specifically didn’t port scan, and we only sent a slightly elevated number of DNS queries.

You can find other modules that might be useful with pkg list --source domains and pkg list --source subdomains. You need to install some modules first; the fastest way to get started is the quickstart command, which installs all featured modules.

If you’ve discovered new subdomains and don’t want to re-resolve all the others, use the following to select only subdomains whose resolvable field is set to neither true nor false (i.e. null):

```
[sn0int][gov-af] > use dns-resolve
[sn0int][gov-af][kpcyrd/dns-resolve] > target where resolvable is null
[+] 25 entities selected
[sn0int][gov-af][kpcyrd/dns-resolve] > run -j 32
```
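The idea behind `where resolvable is null` is incremental work: only names without a verdict get checked, and existing results are left alone. A minimal Python sketch of that bookkeeping follows. `resolve_pending` and the `is_resolvable` callback are hypothetical names, not part of sn0int.

```python
from __future__ import annotations

from typing import Callable


def resolve_pending(
    status: dict[str, bool | None],
    is_resolvable: Callable[[str], bool],
) -> list[str]:
    """Check only names whose status is still None and update `status` in place.

    Returns the names that were checked, analogous to `target where resolvable is null`.
    """
    # Build the list first so we don't mutate the dict while iterating over it.
    pending = sorted(name for name, value in status.items() if value is None)
    for name in pending:
        status[name] = is_resolvable(name)
    return pending
```

Names that were already marked true or false are never passed to `is_resolvable`, so rerunning the function after adding a handful of new subdomains costs only a handful of lookups.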

If you’re planning to take this further and engage in “mild scanning”, you can use the kpcyrd/url-scan module to attempt an HTTP and an HTTPS request to every resolvable subdomain. You’ll likely want to run this with concurrency (like -j 16 or -j 32).

```
[sn0int][gov-af] > use url-scan
[sn0int][gov-af][kpcyrd/url-scan] > target where resolvable
[+] 536 entities selected
[sn0int][gov-af][kpcyrd/url-scan] > # run -j 32
```
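To illustrate what such a scan does, here is a rough Python sketch that builds an http:// and an https:// URL for each host and records the status of whatever response comes back. It is not the url-scan module’s code, and the helper names are hypothetical. Note that `urllib` follows redirects, so the recorded status belongs to the final response.

```python
from __future__ import annotations

import http.client
import urllib.error
import urllib.request
from concurrent.futures import ThreadPoolExecutor
from typing import Iterable, Iterator


def candidate_urls(hosts: Iterable[str]) -> Iterator[str]:
    """Yield an http:// and an https:// root URL for every host."""
    for host in hosts:
        for scheme in ("http", "https"):
            yield f"{scheme}://{host}/"


def probe(url: str, timeout: float = 10.0) -> tuple[str, int | None]:
    """Return (url, status) of the final response, or (url, None) if none arrived."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return url, resp.status
    except urllib.error.HTTPError as err:
        return url, err.code  # the server answered, but with an error status
    except (urllib.error.URLError, http.client.HTTPException, OSError):
        return url, None  # DNS/TLS/connection errors, timeouts, malformed responses


def scan(hosts: Iterable[str], jobs: int = 32) -> dict[str, int | None]:
    """Probe all candidate URLs with `jobs` concurrent workers."""
    with ThreadPoolExecutor(max_workers=jobs) as pool:
        return dict(pool.map(probe, candidate_urls(hosts)))
```

Like the sn0int run above, this actively contacts every host, so only start it once you’ve decided that this level of interaction is acceptable.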

Afterwards, you can list all URLs that responded with status 200:

```
[sn0int][gov-af] > select --values urls where status = 200
```

This list can then be fed into archiving tools like ArchiveBot or grab-site.
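If your scan results live outside sn0int (for example as (url, status) pairs from your own tooling), a short Python sketch can turn them into a plain one-URL-per-line list. Check the documentation of the archiving tool you use for how it accepts URL lists. `url_list_for_archiving` is a hypothetical helper name.

```python
from __future__ import annotations

from typing import Iterable


def url_list_for_archiving(results: Iterable[tuple[str, int | None]]) -> str:
    """One URL per line for every URL that answered 200, de-duplicated and sorted."""
    urls = sorted({url for url, status in results if status == 200})
    return "".join(url + "\n" for url in urls)
```

For example, `pathlib.Path("urls.txt").write_text(url_list_for_archiving(results))` writes the list to a file (the file name is only an example).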